Who is at your conference

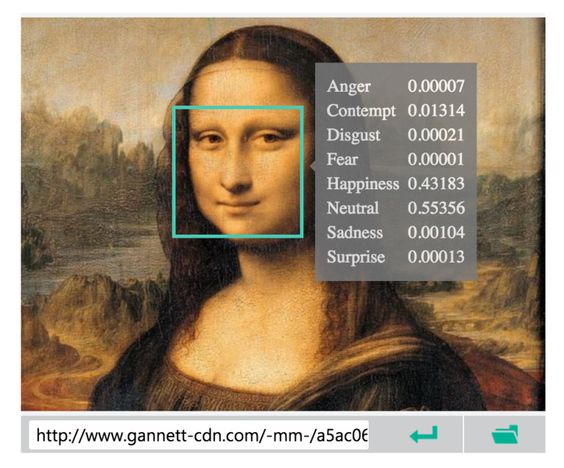

While researching my presentation on the Azure Container Service, I came up with the idea to see what people were tweeting about the conference I was going to attend. For that alone I wouldn't even need to write code, because the Twitter app on my phone can do it. But what other data is available from the Twitter API? And to take it even further, why not let the Microsoft Cognitive Services examine people's profiles, backgrounds and tweeted pictures? The goal is to show how much data is available about somebody and that we can monitor the data a conference generates.

This series of blog posts will explain how I got things working and how the code evolves over time as I visit more conferences and add new ideas.

The approach I've been taking is a microservice architecture: every app runs in its own container and is responsible for only one job. This ensures that every part of the system can be scaled and monitored separately.

There is still a lot of work to be done, but it's now a working example. For instance, I need to improve my Dockerfiles, because at the moment my images seem rather big for the little work they do. I would also love to include some tests and health checks, and maybe even rewrite the code in TypeScript.

Disclaimer: this code is still a work in progress, so if you want to help out or just improve my bad coding style, then check out the projects on GitHub.

The system currently consists of three apps:

- TwitStreamReader: reads a certain value from the Twitter API, for example a hashtag or a user. It then stores everything it receives in an Azure Storage table and places an item in a queue for further processing.
- DataExtracter: the data returned from the Twitter API is a huge JSON object. This app reads the JSON and updates the table row with the JSON converted into separate columns and their values. When it is done, it adds an item to another queue so that the next app knows which items have been processed.
- PictureTranslater: reads items from a queue and calls the Microsoft Cognitive Services API with the URL of the profile image of the user it's processing. The values returned are then added to the row in the table.

For every part of the service there is also a Docker container available on Docker Hub.
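To make the DataExtracter step more concrete, here is a minimal TypeScript sketch of turning a nested tweet JSON object into flat table columns. It is not the project's actual code. It assumes that nested keys are joined with an underscore (`user_screen_name`), that arrays are stored as JSON strings, and that `null` values are skipped. Azure Table Storage only stores simple property types (strings, numbers, booleans, dates and so on), so nested objects have to be flattened one way or another. The function name `flattenTweet` is hypothetical. The sample fields (`id_str`, `text`, `user.screen_name`, `user.profile_image_url_https`, `entities.hashtags`) are taken from the Twitter v1.1 tweet object.

```typescript
// Column values a table row can hold in this sketch (simple types only).
type Scalar = string | number | boolean;

// Hypothetical helper: recursively flattens a parsed tweet into column/value pairs.
// Nested keys are joined with "_" (an assumption, not the project's convention).
function flattenTweet(
  value: unknown,
  prefix = "",
  out: Record<string, Scalar> = {},
): Record<string, Scalar> {
  if (value === null || value === undefined) {
    return out; // skip empty values instead of storing nulls
  }
  if (Array.isArray(value)) {
    out[prefix] = JSON.stringify(value); // keep lists such as hashtags as a JSON string
    return out;
  }
  if (typeof value === "object") {
    for (const [key, child] of Object.entries(value as Record<string, unknown>)) {
      const column = prefix ? `${prefix}_${key}` : key;
      flattenTweet(child, column, out);
    }
    return out;
  }
  if (typeof value === "string" || typeof value === "number" || typeof value === "boolean") {
    out[prefix] = value;
  }
  return out;
}

// Usage with a tiny, made-up tweet.
const tweet = {
  id_str: "123",
  text: "Hello #conference",
  user: { screen_name: "someone", profile_image_url_https: "https://example.com/a.jpg" },
  entities: { hashtags: [{ text: "conference" }] },
  place: null,
};
const row = flattenTweet(tweet);
// Columns: id_str, text, user_screen_name, user_profile_image_url_https, entities_hashtags
console.log(Object.keys(row));
```

Because every tweet can contain different fields, every flattened row can end up with a different set of columns. A schemaless table store handles that without any migration.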

Some design choices:

- I've chosen to use an Azure queue to let every service know which item to process next or which item is done processing. I could have used a messaging service such as RabbitMQ for this, but for now it's a queue.
- The JSON object retrieved from Twitter is extracted into a table. Luckily, Azure Table Storage is a NoSQL store, so every row can look different and have different columns. I could have just kept the JSON object and passed that around, but the table was a little easier to read while developing. I used the Azure Storage Explorer to examine what was in my Table Storage.
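The queue-based hand-off between the services can be sketched in a few lines of TypeScript. This is an illustration under heavy assumptions, not the project's code and not the Azure SDK. `InMemoryQueue` and the `Map` are hypothetical in-memory stand-ins for an Azure Storage queue and table. The functions `storeTweet`, `extractNext`, `translateNext` and `analyzeImage` are made-up names for the three apps' jobs. `analyzeImage` is a stub where the Cognitive Services call would go. The sketch shows that each service only reads from its input queue, updates the shared row, and notifies the next service through its output queue. A real Azure queue message becomes invisible when received and must be deleted explicitly once processed, which this sketch does not model.

```typescript
// A table row: a bag of simple columns, so rows may differ from each other.
type Row = Record<string, string | number | boolean>;

// Hypothetical in-memory stand-in for an Azure Storage queue.
class InMemoryQueue {
  private messages: string[] = [];
  send(message: string): void {
    this.messages.push(message);
  }
  receive(): string | undefined {
    return this.messages.shift(); // removes immediately; Azure would hide it instead
  }
}

// Hypothetical stand-ins for the table and the two queues between the services.
const table = new Map<string, Row>();
const extractQueue = new InMemoryQueue();
const pictureQueue = new InMemoryQueue();

// TwitStreamReader's job: store the raw tweet and tell the next service about it.
function storeTweet(rowKey: string, rawJson: string): void {
  table.set(rowKey, { raw: rawJson });
  extractQueue.send(rowKey);
}

// DataExtracter's job: copy a few fields into columns, then pass the key on.
// Returns false when its input queue is empty.
function extractNext(): boolean {
  const rowKey = extractQueue.receive();
  if (rowKey === undefined) return false;
  const row = table.get(rowKey);
  if (row === undefined) return true; // message consumed, but the row is gone
  const tweet = JSON.parse(String(row.raw));
  table.set(rowKey, {
    ...row,
    text: tweet.text,
    screenName: tweet.user.screen_name,
    profileImageUrl: tweet.user.profile_image_url_https,
  });
  pictureQueue.send(rowKey);
  return true;
}

// Hypothetical stub standing in for a Cognitive Services image analysis call.
function analyzeImage(url: string): Row {
  return { imageDescription: `stub analysis of ${url}` };
}

// PictureTranslater's job: analyze the profile image and merge the result into the row.
function translateNext(): boolean {
  const rowKey = pictureQueue.receive();
  if (rowKey === undefined) return false;
  const row = table.get(rowKey);
  if (row === undefined) return true;
  table.set(rowKey, { ...row, ...analyzeImage(String(row.profileImageUrl)) });
  return true;
}

// Usage: one tweet flows through all three stages.
storeTweet(
  "tweet-1",
  JSON.stringify({ text: "Hi", user: { screen_name: "dev", profile_image_url_https: "https://example.com/p.jpg" } }),
);
while (extractNext()) {}
while (translateNext()) {}
const stored = table.get("tweet-1");
console.log(stored);
```

Swapping the in-memory queue for RabbitMQ or a real Azure queue would only change how `send` and `receive` are implemented; the stages themselves stay the same.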

End Result

In the end I got it working in a really simple way. To quickly show the data, I used Power BI to visualize it cleanly. This was super easy: I made the connection, put some columns on my canvas and had my report. It shows what people tweeted, who they are, what the Cognitive Services API thinks of their profile picture, and some other information. I hope the people in this screenshot don't mind that I used their data for an experiment. In all fairness, I did warn them, and the data is already publicly available. But if they do mind, they can always reach out to me and I will remove them.